Archive

AI – rescuing the spectrum crunch (part 3)

Part 1 of this post was all about the reasons why DARPA was right when it assigned a Grand Challenge to try and tackle the looming spectrum crisis. Part 2 talked about the operating scenarios – how this could pan out in practice. In this concluding chapter I will attempt to determine the impact on both traditional and new players.

_________________________________________________________________

For the regulator, it brings the “uberization” concept right to his doorstep – promoting the economic virtues of efficient spectrum utilization. Since spectrum could be acquired “on the fly”, the upfront payments made by operators would be replaced by transaction-based income, with dynamic pricing based upon supply and demand. It would dissuade spectrum hoarding and introduce a heightened level of competition – one which could benefit the end consumer.
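As a rough illustration of what “dynamic pricing based upon supply and demand” could mean in practice, here is a minimal sketch. The function name, the floor and cap on the multiplier, and the simple demand-to-supply ratio are all assumptions made for illustration – nothing here reflects an actual regulatory or DARPA pricing scheme.

```python
def spot_price(base_price: float, demand_mhz: float, supply_mhz: float,
               floor: float = 0.5, cap: float = 5.0) -> float:
    """Hypothetical per-MHz spot price for spectrum acquired 'on the fly'.

    The price scales with the ratio of requested to available spectrum,
    clamped between an assumed floor and cap so it never collapses to zero
    or spikes without bound.
    """
    if supply_mhz <= 0:
        raise ValueError("supply_mhz must be positive")
    ratio = demand_mhz / supply_mhz
    multiplier = min(max(ratio, floor), cap)
    return base_price * multiplier
```

With a scheme along these lines, an operator sitting on idle spectrum earns little from it, while spectrum in a congested cell commands a premium – which is the anti-hoarding incentive described above.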

For the operator, it would significantly level the playing field since all operators would have access to the same spectrum – spectrum they previously acquired for exclusive use. Those operators which successfully woo the customer would win; premium operators would be able to engage high-value customers by securing access to additional spectrum and hence additional capacity. It would open up a market for newer players not willing to play that game – instead targeting lower-tier customers (those who potentially do not need high-speed data). The lack of upfront investment in spectrum would allow operators to efficiently allocate capital to better serve customer needs. They could also sell this capability as a service to other service providers (e.g. Netflix) who may need a particular QoS and throughput – and are willing to pay for it.

But the biggest impact, I suspect, will be for the newer entrants. All these players seek to provide a great user experience to the customer irrespective of his operator. If we take a potential case such as 4K streaming where only one provider has a majority of the spectrum – then trying to stream with another operator would result in a poorer user experience (this of course leads to a lock-in scenario). With dynamic allocation – you could simply define different tiers (and quality levels) for your users and partner with all infrastructure players (e.g. operators) to ensure that spectrum can be made available on demand for your service, thus ensuring a high-quality experience (for the money you have paid).

Does it drive the operator down the bit-pipe route? Perhaps yes and no. While the operator would not be the face to the end customer, it would by its very nature foster a closer collaboration between the operator and the end service provider. The customer could very well be the ultimate winner – with companies pandering to best serve their needs.

With potential global ramifications – I see some very clever ideas coming out of the woodwork, and folks such as Chamath investing in these “world changing” technologies to make a difference. I just hope that the big Telcos and/or the FCC and other regulators don’t view this as a threat to the status quo – but rather as an opportunity to derive additional value from an already scarce resource!

AI – rescuing the spectrum crunch (Part 1)

Chamath Palihapitiya, the straight-talking boss of Social Capital, recently sat down with Vanity Fair for an interview where he illustrated what his firm looks for when investing. “We try to find businesses that are technologically ambitious, that are difficult, that will require tremendous intellectual horsepower, but can basically solve these huge human needs in ways that advance humanity forward.”

Around the same time, and totally unrelated to Chamath and Vanity Fair, DARPA, the much vaunted US agency credited among other things with setting up the precursor to the Internet as we know it, threw down a gauntlet at the International Wireless Communications Expo in Las Vegas. What was it? A grand challenge – ‘The Spectrum Collaboration Challenge’. As the webpage summarized it, this “is a competition to develop radios with advanced machine-learning capabilities that can collectively develop strategies that optimize use of the wireless spectrum in ways not possible with today’s intrinsically inefficient static allocation approaches”.

Why would this be ‘Grand’? Simply because DARPA had accurately pointed out one of the greatest challenges facing mobile telephony – the lack of available “good” spectrum. In doing so, it also indirectly recognized the indispensable role that communications plays in today’s society, and the fact that continuing down the same path as before may simply not be tenable 10, 20 or 30 years from now, when demands for spectrum and capacity simply outstrip what we have right now.

Such Grand Challenges are not to be treated lightly – they set the course for ambitious endeavors, tackling hard problems with potentially global ramifications. If you wonder how autonomous cars have evolved so fast, it is due in no small measure to programs such as these, which fund and accelerate development in these areas.

Now you may ask why? Why is this relevant to me and why is this such a big deal? The answer emerges from a few basic principles, some of which are governed by the immutable laws of physics.

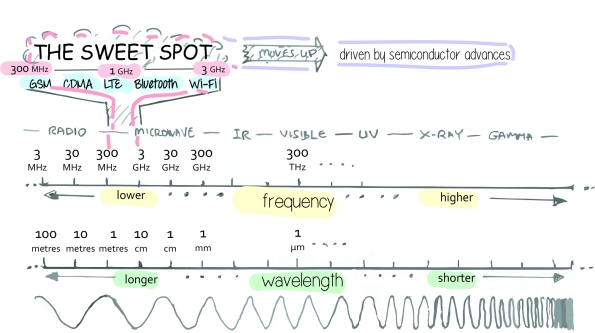

- Limited “good” Spectrum – the basis on which all mobile communications exists is a finite quantity. While the “spectrum” itself is infinite – the “good spectrum” (i.e. between 600 MHz and 3.5 GHz), the part which all mobile telephones use, is limited and, well, presently occupied. You can transmit above that (5 GHz and above, and yes, folks are considering and doing just that for 5G), but then you need a lot of base stations close to each other (which increases cost and complexity), and if you transmit a lot below that (i.e. 300 MHz and below) – the antennas are typically quite big and unwieldy (remember the CB radio antennas?)

Courtesy: wi360.blogspot.com

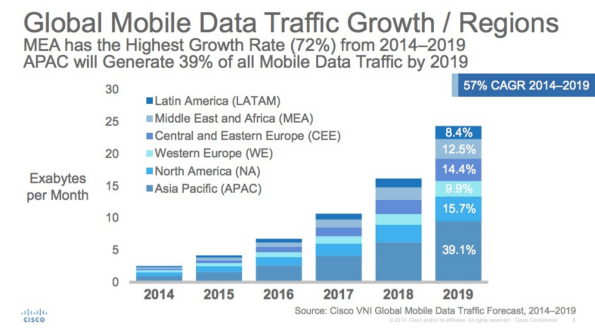

- Increasing demand – if there is one thing all folks, whether regulators, operators or internet players, agree upon it is this: that we humans seem to have an insatiable demand for data. Give us better and cheaper devices and cool services such as Netflix at a competitive price point, and we will swallow it all up! If you think humans were bad, there is also a projected growth of up to 50 Bn connected devices in the next 10 years – all of them communicating with each other, humans and control points. These devices may not require a lot of bandwidth, but they sure can chew up a lot of capacity.

CISCO VNI

- and as a consequence – increasing price to license due to scarcity. While the 700 MHz spectrum auction in 2008 enriched the US Government coffers by USD 19.0 Bn (YES – BILLION), the AWS-3 spectrum (in the less desirable 1.7/2.1 GHz band) auction netted them a mind-boggling USD 45.0 Bn.

One key element which keeps driving up the cost of spectrum is that the business model of all operators is based around a setup which has remained pretty much the same since the dawn of the mobile era. It follows a fairly, well, linear approach:

- Secure a spectrum license for a particular period of time (sometimes linked to a particular technology) along with a license to provide specific services

- Build a network to work in this spectrum band

- Offer voice, data and other services (either self-built or via 3rd parties) to customers

While this system worked in the earlier days of voice telephony it has now started fraying around the edges.

- Regulators want consumers to have access to services at a reasonable price, and want a competitive market environment to ensure this. However, with a looming spectrum scarcity, prices for spectrum are surging – prices which are indirectly or directly passed on to the customer

- If regulators hand spectrum out evenly, while it may level the playing field for the operator it does nothing to address a customer need – that the capacity offered by any one operator may not be sufficient… leaving everyone wanting for more, rather than a few being satisfied

- Finally, the spectrum in many places around the world remains inefficiently used. There are many regions where rich firms hoard spectrum as a defensive strategy to depress competition. In other environments there are cases when an operator who has spectrum has a lot of unused capacity, while another operator operates beyond peak – with poor customer experience. No wonder, previous generations of networks were designed to sustain near peak loads – increasing the CAPEX/ OPEX required to build up and run these networks.

In the next part of this article we will dive deeper into these issues, trying to understand how an AI enabled dynamic spectrum environment may work and in the last note point out what it could mean to the operator community and internet players at large…..

Google Fi…. a case of self service and identity

The past couple of days have been quite interesting, with the launch of Google’s MVNO service. Although I still stick to my earlier stance, there are a few interesting nuggets which are worth examining. I will not go into the details of the service, which you can find all over the internet – such as here – but rather look at a couple of avenues which are much less talked about, and which I feel are very relevant.

The first is the idea of a carrier with complete self-service. Now self-service is not rocket science – but when you look at legacy firms (and yes – I mean regular Telcos) this does account for a LOT. In the current ecosystem of cost cutting – one of the largest areas where you can reduce costs is head-count. To give you an idea what this means for a US Telco – one only needs to read the news around the consolidation of call centers at both T-Mobile and Verizon. We are not talking of 100 – 200 people here, we are literally talking about thousands of people! And people cost money – and training – and, well, can even indulge in illegal activities like selling your social security details! If you have a very well designed self-service – you could do away with most (I daresay even all) of them and voila – you have a lean service which meets a majority of your customer needs. In this case, although you end up buying wholesale minutes/data from operators – you have none of the hassles of operating a network, and certainly none of the expensive overhead such as a call center. No wonder you can match price with the competition – and perhaps do so at an attractive margin! Not sure if Google already has this in place, but I believe it does have the computing and software wizardry to accomplish this.

The next is the concept of a phone number as your identity. This has been sacrosanct to operators till now – and Google has smartly managed to wiggle its way in. Now it really doesn’t make a difference what device you use – all your calls will be routed via IP – so the number is no longer “tied” to your device. Maybe in the future your number would no longer be relevant; maybe it would only be an IP address. What would be important is your identity. Simply speaking, perhaps you could go to any device and log in with your credentials – and you see the home-screen as it is configured for you, irrespective of device.

However, here I find it difficult to understand Google’s approach. For a unique identity they chose to go with Google Hangouts…. and although I don’t know usage figures – I doubt if this is a very “popular” platform to begin with. Is this Google’s way of trying to push the adoption of Google Hangouts, especially since most of its efforts around social have proven to be a lot of smoke without fire? Will this approach limit its appeal to the few who are Hangout supporters, is this the first salvo in a wider range of offerings, or will this be a one trick pony to rocket Google on the social map … only time may tell.

But one thing is certain, such services will serve to force operators to keep optimizing – using techniques such as self service to reduce costs and offer even better solutions to their customers – at better price points. Maybe in a way it is not unlike Google Fiber – it serves to push the market in the right direction rather than be a game changer on its own. That in itself – would be worthy of applause.

The dynamic pricing game – where all is not 99c

Phil Libin, the effervescent CEO at Evernote, made headlines two days ago when he admitted that Evernote’s pricing strategy had been a bit arbitrary and that a new pricing scheme for their premium offering would be launched come 2015. Although little was said about what the approach would be – I do hope it points to adopting a flexible pricing approach, and serves as a forerunner for pricing strategies to legions of firms down the road.

To put some context, many software providers (especially app companies) have adopted a one-price-fits-all approach; i.e. if the price for the app is $5 per month in USA, the price is $5 per month in India. The argument has typically been one of these:

- Companies such as Apple do not practice price differentiation around the world, and yet they sell – so we should also be able to do the same

- Adopting a one price fits all approach streamlines our go-to-markets and avoids gaming by users

- It is unfair to users who would end up paying a premium for a product which can be sourced for a cheaper price elsewhere

From first-hand experience I believe that such attitudes have proven to be one of the biggest stumbling blocks for firms seeking global success. A good way to explain why is to pry apart these assertions.

- The Brand proposition argument – Although every firm would love to believe (and some certainly do, in a misguided manner) that it has a premium niche such as Apple’s – harsh reality points in a different direction. There are only two brands who top $100 bn – and Apple is one of them; and no – unless you are Google, you are not the other! Even though your brand may be well known in your home of Silicon Valley, its awareness most likely diminishes with the same exponential loss as a mobile signal – its value in Moldova, for example, may be close to zero. The simple truth is that there are only a handful of globally renowned brands (e.g. Apple, Samsung, BMW, Mercedes, Louis Vuitton etc.) which can carry a large and constant premium around the world.

- The “avoid gaming” argument – this does have some merit, but needs to be considered in the grand scheme of things. Yes – this is indeed possible, but is typically limited to a small cross section of users who have foreign credit cards/ bank accounts etc. The vast majority are domestic users who are limited to their local accounts and app stores. The challenge here is to charge the same fee irrespective of the relative earning power in a country. While an Evernote could justify a $5 per month premium in USA (a place where the average mobile ARPU is close to $40), it is very hard to justify it in South East Asia (mobile ARPU close to $2) or even Eastern Europe (mobile ARPU close to $8). It then would simply limit the addressable market to a small fraction of its overall potential – and dangerously leave it open to other competitors to enter.

| Region | MENA | APAC | Oceania | LatAm | USA | W.EU | E.EU |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Mobile ARPU | $12.66 | $2.46 | $11.37 | $9.20 | $48.15 | $23.88 | $8.77 |

- The unfairness argument – also doesn’t hold true. The “Big Mac Index” stands testament to the fact that price discrimination is an important element of market positioning.

Even if you argue that you cannot buy a burger in one country to sell in another, the same holds for online software – take the example of Microsoft with its Office 365 software product, same product – different country – different price.

- That brings me back to the final point – $5 per month may sound like a good deal if you are a hard core user, but if you compare it with Microsoft Office 365 – which also retails at $5 per month, it is awfully hard to justify why one would pay the same for what is essentially a very good note taking tool.
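To make the earning-power argument concrete, here is a small sketch that scales a $5 US price by each region’s mobile ARPU relative to the USA, using the figures from the ARPU table above. The proportional rule, the `regional_price` name and the $0.49 price floor are assumptions chosen purely for illustration; this is one possible flexible-pricing heuristic, not Evernote’s (or anyone’s) actual scheme.

```python
# Mobile ARPU per region, copied from the table in this post.
ARPU = {
    "MENA": 12.66, "APAC": 2.46, "Oceania": 11.37, "LatAm": 9.20,
    "USA": 48.15, "W.EU": 23.88, "E.EU": 8.77,
}

US_PRICE = 5.00  # the $5 per month reference price discussed above


def regional_price(region: str, floor: float = 0.49) -> float:
    """Scale the US price by the region's ARPU relative to the USA.

    The floor is a hypothetical minimum so that very low-ARPU regions
    still produce a non-trivial price.
    """
    scaled = US_PRICE * ARPU[region] / ARPU["USA"]
    return round(max(scaled, floor), 2)


if __name__ == "__main__":
    for region in ARPU:
        print(f"{region}: ${regional_price(region):.2f}")
```

Even this crude rule puts the Eastern European price at under a dollar a month, which gives a feel for how far a flat $5 sits from local purchasing power.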

Against this background, I do welcome the frank admission that this strategy is in need of an update, and am also happy to hear that the premium path isn’t via silly advertisements. Phil brought up a good challenge with his 100-year start-up, and I am delighted to know that he is still happy to pivot like one. I do for sure hope that the other “one trick pony” start-ups learn from this and follow suit.

À la carte versus All you can eat – the rise of the virtual cable operator

Recent announcements by the FCC proposing to change the interpretation of the term “Multi-channel Video Programming Distributor (MVPD)” to a technology-neutral one have thrown a lifeline to providers such as Aereo, who had been teetering on the brink of bankruptcy. Although it is a wide open debate whether this last-minute reprieve will serve Aereo in the long run, it serves as a good inflection point to examine the cable business as a whole.

“Cord cutting” seems to be the “in-thing” these days, with more people moving away from cable and satellite towards an on-demand approach – whether via Apple TV, Roku or now – via Amazon’s devices. This comes from a growing culture of “NOW”; rather than wait for an episode at a predefined hour, the preference is to watch a favorite series at a time, place – and now device – of one’s choosing. Figures estimate up to 6.5% of users have gone this route, with a large number of new users not signing up to cable TV in the first place. The only players who seem to have weathered this till date are premium services such as HBO who have ventured into original content creation, and providers offering content such as ESPN – the hallmark of live sports. Those who want to cut the cord end up dealing with numerous content providers – each offering their own services, billing solutions etc. Putting together all the services that a user likes ends up being a tedious and, right now, expensive proposition.

This opens the door for what can only be known as the Virtual Cable Operator – one which would get the blessings of the FCC proposal. Such an aggregator (could be Aereo) could bundle and offer such channels without investment into the underlying network infrastructure – offering a cost advantage of as much as 20% compared to current offerings. This trend is a familiar one in the Telco business – and cable companies had better be ready for it. Right now they may be safe as long as ESPN doesn’t go that route – but with HBO, CBS etc. all announcing their own services, I believe it is only a matter of time. While the primary impacts on cable have been extensively covered – there are a few other consequences, and opportunities, that I would like to address.

The smaller channels (those who charge <30c to the cable operators) will experience a dramatically reduced audience. Currently there is a chance that someone will stop by while channel flipping – with an à la carte service this pretty much disappears, and along with it advertising revenue. An easy analogy would be that of an app in an app store – app discovery (in this case channel discovery) becomes highly relevant. Another result is that each channel would be jockeying for space on the “screen” – whether a TV, tablet or phone. I can very well imagine a scenario of a clean slate design like Google on a TV, where based upon your personal interest you would be “recommended” programs to watch – the question is who would control this experience…

Who could this be – a TV vendor (e.g. a Samsung – packaging channels with the TV), an OEM (Roku, Amazon etc.), a Telco or cable provider (if you can’t beat them – join ’em), or someone like Aereo? The field is wide open and the jury has yet to make a decision. One thing however is clear – the first with a winning proposition, including channels, pricing and an excellent UI, would be a very interesting company to invest in…..

Network ‘superiority’ or NOT

The last couple of weeks in the Telecom sector are what I can only describe as ‘circus’ weeks, with both the Mobile World Congress in Barcelona and CEBIT in Hanover occurring in quick succession. Companies spend weeks if not months prepping for this, with several key milestones built around the dates. My thoughts this week are primarily about upending one of the assumptions made by the leading carriers, especially the European Telcos: that network quality will be the chief differentiator. This is not limited to the Europeans: “We Let the Network do the Talking”, said Verizon Wireless! The question is – is this assumption right, or more succinctly put, how much longer will the ‘network superiority’ claim maintain itself?

The underlying reason has to do with two distinct factors – the cost of new technologies and increasingly active regulators. Let us examine each one independently.

The Cost: we now have LTE (call it 4G, call it whatever the marketing department cooks up!) with which you can seamlessly watch videos and other high data rate content. Users seem to love it, and the number of devices is simply exploding, which means that networks have to be designed for even higher demand. Bandwidth is limited (there is only so much of it which is usable for mobile communications), and since LTE already squeezes out every bit of spectral efficiency, the only option in layman’s terms to add capacity is to place base stations closer and closer together (you can then reuse spectrum further away). That means – more base stations per sq. km and well – that translates to a quick escalation in cost. Besides, there is the obvious fact that in a given area there are only so many places where you can put up these sites! Operators have long realized this and in many places they already share assets (towers, sites – and in many cases – even capacity). If you add the cost of ‘winning spectrum’ in an auction then this cost is steep – one many operators are not in a position to recoup, especially in countries where competition keeps driving prices down.

Key Takeaway – Increasing cost of network build-outs will make it prohibitively expensive for an operator to build out a network by itself.
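The base-station density argument above can be made tangible with a back-of-the-envelope sketch. It assumes idealized hexagonal cells of equal radius tiling a flat area with no overlap – a textbook simplification, not how real networks are planned – and the function name and example figures are purely illustrative.

```python
import math


def sites_needed(area_km2: float, cell_radius_km: float) -> int:
    """Estimate the number of sites needed to cover an area.

    Assumes ideal hexagonal cells: a regular hexagon with circumradius r
    has an area of (3 * sqrt(3) / 2) * r^2.
    """
    cell_area = (3 * math.sqrt(3) / 2) * cell_radius_km ** 2
    return math.ceil(area_km2 / cell_area)


if __name__ == "__main__":
    # Hypothetical 100 sq. km city, before and after halving the cell radius.
    print(sites_needed(100, 1.0))
    print(sites_needed(100, 0.5))
```

Because cell area grows with the square of the radius, halving the radius roughly quadruples the number of sites – which is why densification costs escalate so quickly.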

The Regulator: I have been interacting with quite a few regulators in the recent past, and just from this sample I see that many are moving from a reactive into a pro-active mode. This may be due to several reasons, but many authorities have enacted and enforced legislation which has led to price reductions (directly increasing consumption) and, more critically, has leveled the playing field to introduce ‘innovation’ from other – primarily OTT – players. Although operators may not like it – this is a trend that is likely to continue. What this results in is that even if the operator builds his ‘super-star’ network he is forced to open it to others – thus directly dis-incentivizing him from building the network in the first place. He becomes a ‘super dumb pipe’. Other players are then able to offer attractive solutions and monetize them directly.

Key Takeaway – Active regulators make it difficult for operators to extract the best value from the network.

Where does it leave us – Operators need to think beyond the ‘network’ to determine how to survive. A leaf can be taken out of the MVNO model where players (some even as small as two people in 1 room!) are able to identify target segments and provide tailor made solutions which customers want. Future networks will allow different levels of QoS – hence this segmentation could offer differing levels of service. The future could herald the introduction of the pure ‘Net-Telco’ offering only aggregate services – similar to LightSquared, a valiant effort but which failed due to technical reasons (their spectrum was interfering with GPS users). Operators will definitely fight this, but the writing is on the wall. They need to revamp their large, inefficient organizational structures into a more lean, market oriented approach. Then – when the time to give up the ‘network’ arrives – the organization that has planned for it will come out victorious.

The disappearing SIM

If there is one piece of telecom real-estate that the operator still has a stranglehold on then it is the end customer – and the little SIM card holds all the data of the customer. Interestingly enough, as smartphones continue to grow in size (some of them would definitely not fit in my pocket anymore) the SIM card appears to be following a different trajectory altogether. Apple again has been the undisputed leader in this game with the introduction of first the micro-SIM and now the nano-SIM.

Reproduced from Wikimedia Commons

To be clear here, currently this has not led to any dramatic impact upon the capabilities of the SIM. Advances in technology continue to ensure that more and more information can be squeezed into a smaller and smaller footprint. And although there have been claims that the main purpose was elegance in design and to squeeze more into the limited space that exists, I do wonder if there is a long-term strategic motive in this move.

Let us revisit the SIM once again; in the broader sense it is a repository of subscriber and network information on a chip. In the modern world of apps, cloud services and over-the-top updates, is there any real USP in having an actual piece of hardware embedded in the phone? This is not a revolutionary thought – in fact Apple was rumored to have thought about it a couple of years ago – and was working towards a SIM-less phone. The concept is not new – some interesting notes about what this could look like can be found back in 2009. From what I gathered, a strong united ruckus from the carriers at that time dissuaded them from continuing down that path.

Two years have passed since then, and you continue to see margin pressures on the operators while folks such as Apple and Samsung continue to be the darlings of Wall Street, and well – the SIM card gets smaller and smaller. How does that impact the operator? Well, for one, if the operators continue to bank on the actual chip as their own property, perhaps this advantage will continue to slip away. This is increasingly important since, although the SIM could get smaller and still accommodate the traditional functions of a SIM, if you then add in other potential functionalities such as payment, security etc., at some point you do need additional real estate to include them. And at that time if the SIM is just too small, and there are a large number of handset manufacturers (whom customers want) who design to this nano spec, then well – the operator is out of options.

However, this ‘creative destruction’, as coined by Schumpeter, is perhaps the trigger that the telecom operators need in the first place. The E-SIM is perhaps not the end of the game for them, but the advent of a new start. This would be one where software triumphs over hardware – where functionalities are developed and embedded upon multiple operating systems and customized based upon each individual device and user’s capabilities. Here, rather than mass producing SIMs and having processes to authorize and configure systems, users could take any phone and get registered over the air, and have capabilities dynamically assigned and configured to suit their specific need. In some ways – it would be the era of mass customization, which can be done efficiently, easily and seamlessly.

It would definitely be a different ball-game, and I can only speculate which way the ball would spin – but perhaps it would be in the operators’ own interests to willingly embrace such a transformation rather than push back – the opportunities might simply outnumber the risks.