Archive

Infrastructure Edge: Awaiting Development

Editor’s Note: This article originally appeared on the State of the Edge blog. State of the Edge is a collaborative research and educational organization focused on edge computing. They are creators of the State of the Edge report (download for free) and the Open Glossary of Edge Computing (now an official project of The Linux Foundation).

When I began looking into edge computing just over 24 months ago, weeks would go by with hardly a whimper on the topic, apart from sporadic briefs about local on-premises deployments. Back then, there was no State of the Edge Report and certainly no Open Glossary of Edge Computing. Today, an hour barely passes before my RSS feed buzzes with the “next big announcement” around edge. Edge computing has clearly arrived. When Gartner releases their 2018 Gartner Hype Cycle later this month, I expect edge computing to be at the steepest point in the hype cycle.

Coming from a mobile operator heritage, I have developed a unique perspective on edge computing, and would like to double-click on one particular aspect of this phenomenon: the infrastructure edge and its implications for the broader ecosystem.

The Centralized Data Center and the Wireless Edge

So many of today’s discussions about edge computing ascribe magical qualities to the cloud, suggesting that it’s amorphous, ubiquitous and everywhere. But this is a misconception. Ninety percent of what we think of as cloud is concentrated in a small handful of centralized data centers, often thousands of miles and dozens of network hops away. When experts talk about connecting edge devices to the cloud, it’s common to oversimplify and emphasize the two endpoints, the device edge and the centralized data center, skipping over the critical infrastructure that connects these two extremes: namely, the cell towers, RF radios, routers, interconnection points, network hops, fiber backbones, and other critical communications systems that liaise between edge devices and the central cloud.

In the wireless world, this is not a single point; rather, it is distributed among the cell towers, DAS hubs, central offices and fiber routes that make up the infrastructure side of the last mile. This is the wireless edge, with assets currently owned and/or operated by network operators and, in some cases, tower companies.

The Edge Computing Land Grab

The wireless edge will play a profound and essential role in connecting devices to the cloud. Let me use an analogy of a coastline to illustrate my point.

Imagine a coastline stretching from the ocean to the hills. The intertidal zone, where the waves lap upon the shore, is like the device edge, full of exciting activities and a robust ecosystem, but too ephemeral and subject to change for building a permanent structure. Many large players, including Microsoft, Google, Amazon, and Apple, are vying to win this prized spot closest to the water’s edge (and the end user) with on-premises gateways and devices. This is the domain of AWS Greengrass and Microsoft Azure IoT Edge. It’s also the battleground for consumers, with products like Alexa, Android, and iOS devices. In this area of the beach, the battle is primarily between the internet giants.

On the other side of the coastline, opposite the water, you have the ridgeline and cliffs, from where you have a bird’s-eye view of the entire surroundings. This “inland” side of the coastline is the domain of regional data centers, such as those owned by Equinix and Digital Realty. These data centers provide an important aggregation point for connecting back to the centralized cloud and, in fact, most of the major cloud providers have equipment in these co-location facilities.

And in the middle — yes, on the beach itself — lies the infrastructure edge, possibly the ideal location for a beachfront property. This space is ripe for development. It has never been extensively monetized, yet one would be foolhardy to believe that it has no value.

In the past, the wireless operators who caretake this premier beachfront space haven’t been successful in building platforms that developers want to use. Developers have always desired global reach along with a unified, developer-friendly experience, both of which are offered by the large cloud providers. Operators, in contrast, have largely failed on both fronts—they are primarily national, maybe regional, but not global, and their area of expertise is in complex architectures rather than ease of use.

This does not imply that the operator is sitting idle here. On the contrary, every major wireless operator is actively re-engineering its network to roll out Network Functions Virtualization (NFV) and Software-Defined Networking (SDN) along the path to 5G. These software-driven network enhancements will demand large amounts of compute capacity at the edge, which will often mean micro data centers at the base of cell towers or in local antenna hubs. However, these are primarily inward-looking use cases, driven more by cost optimization than by revenue generation. In our beach example, it is akin to building a hotel call center on the beachfront rather than opening the property up to guests. It may satisfy your internal needs, but it does not generate top-line growth.

Developing the Beachfront

Operators are not oblivious to the opportunities that may emerge from integrating edge computing into their networks; however, there is a great lack of clarity about how to go about doing this. Powerful standards are emerging from the telco world, with Multi-access Edge Computing (MEC), which provides API access to the RAN, being one of the most notable. Still, there is no obvious mechanism for stitching these together into a global platform that offers a developer-centric user experience.

All is not lost for the operator; there are a few firms, such as Vapor IO and MobiledgeX, that have close ties to the infrastructure and operator communities and are tackling the problems of deploying shared compute infrastructure and building a global platform for developers, respectively. Success is predicated on operators joining forces, rather than going it alone or adopting divergent and incompatible approaches.

In the end, just as a developed shoreline caters to the needs of visitors and vacationers, every part of the edge ecosystem will rightly focus on attracting today’s developer with tools and amenities that provide universal reach and ease of use. Operators have a lot to lose by not making the right bets on programmable infrastructure at the edge that developers clamor to use. Hesitate and they may very well find themselves eroded and sidelined by other players, including the major cloud providers, in what is looking to be one of the more exciting evolutions to come out of the cloud and edge computing space.

Friction-free: enabling happy customers

Last week, I had the good fortune to attend MBLT 2014, a conference centered on the rapidly evolving mobile ecosystem. Among the many speakers, the two who piqued my interest were those from Spotify and SoundCloud; both were engaging and, interestingly enough, both talked about growth.

When we talk about growth here, it wasn’t all about entering new markets or creating new products. It involved a lot of small elements which, when brought together, ensured that you gained a lot of users who kept coming back. Although Spotify and SoundCloud both operate in the music space, they (currently) focus on different aspects of this segment. As Andy from SoundCloud succinctly put it: if Spotify was the Netflix of music, then SoundCloud was YouTube. However, the two companies seemed to share a common approach when it came to customer acquisition: develop a frictionless sign-up process.

At its core, this simply meant reducing the number of clicks, mouse moves, etc. required to sign up a user and/or a customer. Both agreed that if any sector was to be considered “best practice”, it would be mobile gaming – and the king of the hill was… well, King.com. The makers of Candy Crush had got this bang on – all you had to do was download the game and you were good to go.

Their mantra: number of clicks to sign up = 0; number of users = millions!

Both SoundCloud and Spotify were always on the lookout for ways to make the whole sign-up process simpler, quicker and more efficient, fully aware that complicated processes turned away prospective users and that getting them back wasn’t a trivial matter. That point got me examining different websites to see just how much this mattered (a study done by Spotify as well). After some pottering around, I couldn’t help but wholeheartedly agree with the rationale of such an approach.

If you now compare this mentality with IT solutions at traditional corporations, you realize how far behind the curve they are in this respect. Many systems there are built with a paranoia about security and little consideration for user engagement. These are what I call “push systems”, where employees have no choice BUT to use the services mandated by IT. The direct result is that personnel either shy away from using these services, or using them becomes an irritating chore (especially since they use better-designed services on a daily basis). Rather than a full-scale rebellion, we are seeing services that simply assist existing ones, such as Brisk.io, gaining traction because continuously updating Salesforce is tedious.

A takeaway would be for IT personnel to work closely with end users in deciding how they would like to engage with the services they use on a daily basis. Focusing on small but important elements such as “clicks to complete entries” will make employees more enthusiastic about using such services, making them more of an aid (which was the purpose) than a chore!

RIM or RIP

Hello readers – it has been a bit of a hiatus, but with so much happening in the industry I felt it was time to dust off the cobwebs and get back to some good ol’ smart-bytes once again. Today will be as much about looking back as peering into the future – enjoy the read.

In September 2012, I chimed in with a small post speculating on the future of BlackBerry – specifically, that their new phones wouldn’t quite make a dent in the market and that the firm would disintegrate if it continued down that path. Fast forward one year and many of those predictions have come true, including the one that BlackBerry would perhaps have to move towards a software strategy rather than stick to creating me-too devices.

The question on everyone’s mind is – well, what next; specifically who (if anyone) would buy the firm or parts of it. Over the last few days many names have been bandied about, from John Sculley, to Lenovo and even the original management among others. What is intriguing is who would command the best price – and what would their plan be?

For starters, one must realize what they are getting into. What you end up buying is a company whose back is broken, both in terms of the absence of a concrete road-map and the enthusiasm of its employees. The former could be fixed; the latter is much more difficult unless a suitor can rally the remaining rank and file within the firm. That being said, BlackBerry still has a few aces up its sleeve. It may not be the darling of the masses anymore, but in government circles it is still the preferred device. If BlackBerry needs a poster boy to this effect, it does not need to look far – President Obama firmly holds on to his device of choice.

In my view, this presents the first avenue for the way forward. BlackBerry has excelled in one thing, and that is security – and the ability to provide it on a global basis. This goes beyond what a common WhatsApp or Viber can provide, and it is what governmental agencies all over the world would always pay a premium for. If the company is able to come up with a suite of services and applications (perhaps even residing on other devices), this would immediately be an attractive proposition to many. After all, who would say no to an iPhone or a Galaxy S4 equipped with the secure services of BlackBerry?

The second area of opportunity is to move beyond just communications into the machine-to-machine (M2M) space. Once again, it was BlackBerry that managed to ink deals with carriers around the world to offer global BBM services at a flat rate. Add security and superior compression protocols to the mix and you get a worldwide network where machine traffic can flow in a secure and reliable fashion. Given the ongoing trend towards cloud-based M2M platforms (from the likes of Ericsson DCP, Jasper, etc.), the availability of such an underlying framework opens the doors to a whole new set of service providers who can offer global M2M services.

Now – it is difficult to say whether either of these paths will be chosen, or whether the suitors are simply interested in its treasure trove of patents and plan to strip the company of its assets before shutting it down. Companies that want to enter the “mobile” space and think that BlackBerry is their golden ticket should perhaps reconsider their options. However, it would be a pity if the company is not given the means and ability to effect a return, albeit as a different software/service company.

As for BlackBerry itself, I do think that the old firm still has a couple of tricks up its sleeve, if it were just given the chance and motivation to use them.

The disappearing SIM

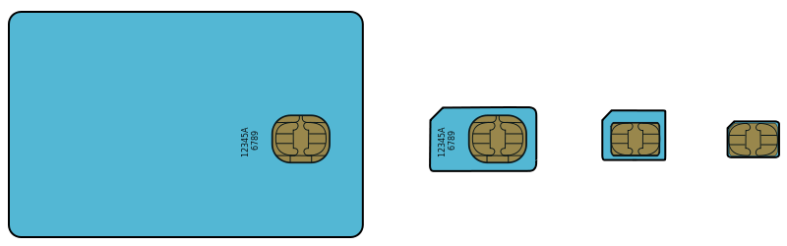

If there is one piece of telecom real estate that the operator still has a stranglehold on, it is the end customer – and the little SIM card holds all the data of the customer. Interestingly enough, as smartphones continue to grow in size (some of them would definitely not fit in my pocket anymore), the SIM card appears to be following a different trajectory altogether. Apple again has been the undisputed leader in this game with the introduction of first the micro-SIM and now the nano-SIM.

Reproduced from Wikimedia Commons

To be clear, this has not so far had any dramatic impact upon the capabilities of the SIM. Advances in technology continue to ensure that more and more information can be squeezed into a smaller and smaller footprint. And although there have been claims that the main purpose was elegance in design and squeezing more into the limited space that exists, I do wonder if there is a long-term strategic motive in this move.

Let us revisit the SIM once again; in the broader sense it is a repository of subscriber and network information on a chip. In the modern world of apps, cloud services and over-the-top updates, is there any real USP in having an actual piece of hardware embedded in the phone? This is not a revolutionary thought – in fact, Apple was rumored to have thought about it a couple of years ago and to have been working towards a SIM-less phone. Nor is the concept new – some interesting notes about what this could look like can be found from back in 2009. From what I gathered, a strong, united ruckus from the carriers at that time dissuaded Apple from continuing down that path.

Two years have passed since then, and you continue to see margin pressures on the operators while folks such as Apple and Samsung continue to be the darlings of Wall Street, and well – the SIM card gets smaller and smaller. How does that impact the operator? For one, if the operators continue to bank on the actual chip as their own property, this advantage may well continue to slip away. This is increasingly important because, although the SIM can get smaller and still accommodate its traditional functions, if you then add in other potential functionalities such as payment, security, etc., at some point you do need additional real estate to include them. And at that time, if the SIM is just too small, and a large number of handset manufacturers (whose phones customers want) design to this nano spec, then the operator is out of options.

However, this ‘creative destruction’, as coined by Schumpeter, is perhaps the trigger that the telecom operators need in the first place. The E-SIM is perhaps not the end of the game for them, but a new start. This would be one where software triumphs over hardware – where functionalities are developed and embedded upon multiple operating systems and customized based upon each individual device and user’s capabilities. Here, rather than mass-producing SIMs and having processes to authorize and configure systems, users could take any phone, get registered over the air, and have capabilities dynamically assigned and configured to suit their specific needs. In some ways, it would be the era of mass customization, which can be done efficiently, easily and seamlessly.

It would definitely be a different ball-game, and I can only speculate which way the ball would spin – but perhaps it would be in the operators’ own interests to willingly embrace such a transformation rather than push back; the opportunities might simply outweigh the risks.