
Infrastructure Edge: Awaiting Development

Editor’s Note: This article originally appeared on the State of the Edge blog. State of the Edge is a collaborative research and educational organization focused on edge computing. They are the creators of the State of the Edge report (available as a free download) and the Open Glossary of Edge Computing (now an official project of The Linux Foundation).

When I began looking into edge computing just over two years ago, weeks would go by with hardly a whimper on the topic, apart from sporadic briefs about local on-premises deployments. Back then, there was no State of the Edge Report and certainly no Open Glossary of Edge Computing. Today, barely an hour passes before my RSS feed buzzes with the “next big announcement” around edge. Edge computing has clearly arrived. When Gartner releases its 2018 Hype Cycle later this month, I expect edge computing to sit at or near the peak of the hype curve.

Coming from a mobile operator background, I have developed a unique perspective on edge computing, and would like to double-click on one particular aspect of this phenomenon: the infrastructure edge and its implications for the broader ecosystem.

The Centralized Data Center and the Wireless Edge

So many of today’s discussions about edge computing ascribe magical qualities to the cloud, suggesting that it’s amorphous and ubiquitous. But this is a misconception. Ninety percent of what we think of as cloud is concentrated in a small handful of centralized data centers, often thousands of miles and dozens of network hops away. When experts talk about connecting edge devices to the cloud, it’s common to oversimplify and emphasize the two endpoints, the device edge and the centralized data center, while skipping over the critical infrastructure that connects these two extremes: the cell towers, RF radios, routers, interconnection points, network hops, fiber backbones, and other communications systems that sit between edge devices and the central cloud.
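To put “thousands of miles away” in perspective, here is a back-of-the-envelope sketch of the minimum round-trip delay that distance alone imposes. It assumes light travels through optical fiber at roughly two-thirds of its vacuum speed (about 200,000 km/s) and ignores queuing, routing and processing at each hop, so it is a lower bound rather than a measurement. The site labels and distances are hypothetical.

```python
# Minimum round-trip propagation delay over fiber: a back-of-the-envelope sketch.
# Assumption: light in optical fiber travels at roughly 2/3 of its vacuum speed,
# i.e. about 200,000 km/s. Queuing, routing and processing at each network hop
# are ignored, so real round-trip times will be higher.

FIBER_SPEED_KM_PER_S = 200_000.0
KM_PER_MILE = 1.609344


def round_trip_ms(distance_miles: float) -> float:
    """Return the round-trip propagation delay in milliseconds for a one-way distance."""
    one_way_km = distance_miles * KM_PER_MILE
    return 2 * one_way_km / FIBER_SPEED_KM_PER_S * 1000


if __name__ == "__main__":
    # Hypothetical one-way fiber distances, chosen only for illustration.
    sites = [("cell-site edge", 10), ("regional data center", 200), ("central cloud", 2000)]
    for label, miles in sites:
        print(f"{label:>20}: {round_trip_ms(miles):6.2f} ms minimum round trip")
```

At 2,000 miles, propagation alone costs roughly 32 ms per round trip before any router has touched the packet; real fiber routes rarely follow straight lines, so actual distances, and delays, are longer.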

In the wireless world, this is not a single point; rather, it is distributed among the cell towers, DAS hubs, central offices and fiber routes that make up the infrastructure side of the last mile. This is the wireless edge, with assets currently owned and/or operated by network operators and, in some cases, tower companies.

The Edge Computing Land Grab

The wireless edge will play a profound and essential role in connecting devices to the cloud. Let me use the analogy of a coastline to illustrate my point.

Imagine a coastline stretching from the ocean to the hills. The intertidal zone, where the waves lap upon the shore, is like the device edge: full of exciting activity and a robust ecosystem, but too ephemeral and subject to change for building a permanent structure. Many large players, including Microsoft, Google, Amazon, and Apple, are vying to win this prized spot at the water’s edge (closest to the end user) with on-premises gateways and devices. This is the domain of AWS Greengrass and Azure IoT Edge. It’s also the battleground for consumers, with products like Alexa, Android, and iOS devices. In this area of the beach, the battle is primarily between the internet giants.

On the other side of the coastline, opposite the water, are the ridgeline and cliffs, from which you have an eagle’s-eye view of the entire surroundings. This “inland” side of the coastline is the domain of regional data centers, such as those owned by Equinix and Digital Realty. These data centers provide an important aggregation point for connecting back to the centralized cloud and, in fact, most of the major cloud providers have equipment in these colocation facilities.

And in the middle — yes, on the beach itself — lies the infrastructure edge, possibly the ideal location for a beachfront property. This space is ripe for development. It has never been extensively monetized, yet one would be foolhardy to believe that it has no value.

In the past, the wireless operators who act as caretakers of this premier beachfront space haven’t been successful in building platforms that developers want to use. Developers have always desired global reach along with a unified, developer-friendly experience, both of which are offered by the large cloud providers. Operators, in contrast, have largely failed on both fronts: they are primarily national, maybe regional, but not global, and their expertise lies in complex architectures rather than ease of use.

This does not imply that operators are sitting idle. On the contrary, every major wireless operator is actively re-engineering its network to roll out Network Function Virtualization (NFV) and Software Defined Networking (SDN) along the path to 5G. These software-driven network enhancements will demand large amounts of compute capacity at the edge, which will often mean micro data centers at the base of cell towers or in local antenna hubs. However, these are primarily inward-looking use cases, driven more by cost optimization than by revenue generation. In our beach example, it is more akin to building a hotel call center on the beachfront rather than opening the space to guests. It may satisfy your internal needs, but it does not generate top-line growth.

Developing the Beachfront

Operators are not oblivious to the opportunities that may emerge from integrating edge computing into their networks; however, there is a great lack of clarity about how to go about it. Powerful standards are emerging from the telco world, one of the most notable being Multi-access Edge Computing (MEC), which provides API access to the RAN. Yet there is still no obvious mechanism for stitching these together into a global platform that offers a developer-centric user experience.

All is not lost for operators: a few firms, such as Vapor IO and MobiledgeX, have close ties to the infrastructure and operator communities and are tackling, respectively, the problems of deploying shared compute infrastructure and building a global platform for developers. Success is predicated on operators joining forces, rather than going it alone or adopting divergent and incompatible approaches.

In the end, just as a developed shoreline caters to the needs of visitors and vacationers, every part of the edge ecosystem will rightly focus on attracting today’s developers with tools and amenities that provide universal reach and ease of use. Operators have a lot to lose by not making the right bets on programmable infrastructure at the edge that developers clamor to use. If they hesitate, they may very well find themselves eroded and sidelined by other players, including the major cloud providers, in what is shaping up to be one of the more exciting developments to come out of the cloud and edge computing space.

The Un-network Uncarrier

Note: This article is the first of a 3-part series about Facebook’s Telecom Infra Project (TIP) initiative and the motivation behind it. The next two parts focus on the options and approaches that operators and vendors, respectively, should adopt to avoid a repeat of what happened with OCP.

When T-Mobile USA announced its latest results on July 19, 2017, CEO John Legere remarked with delight: “we have spent the past 4.5 years breaking industry rules and dashing the hopes and dreams of our competitors…”. The statement rang true: in 2013 the stock hovered at a paltry USD 17.5 and is now close to USD 70, with 17 straight quarters of adding more than 1m customers per quarter. If anything, the “Un-carrier” has so far proven unstoppable. While this track record is remarkable, my hypothesis is that in the long term the “uncarrier” impact may seem puny compared to an “un-network” impact, which could potentially upend the entire telecom industry, operators and vendors alike. If you are still wondering what I am referring to, it is TIP, the Telecom Infra Project, an open initiative started by Facebook in 2016 that now counts more than 300 members.

The motivation for TIP came on the heels of the wildly successful Open Compute Project (OCP). OCP, whose members include Apple, Google and Microsoft, heralded a new way to “white-box” a data center based on freely available specifications and a set of contract vendors ready to build to preset needs. It also heralded a new paradigm (now also seen in other industries): you didn’t need to build and own it… to disrupt it (think Uber and Airbnb). And while many may be led to believe that TIP is primarily aimed at developing countries, in light of Facebook’s goal to connect the “un-connected”, I believe the impact will be felt by operators in developed and developing countries alike. There are a few underlying reasons for this.

- A key ingredient in building a world-class product is a razor-sharp focus on understanding (and potentially forecasting) your customers’ needs. While operators do some of that, Facebook’s approach raises the bar to an altogether different level. And in this analysis one thing is obvious (something operators are also painfully aware of): people have a nearly insatiable appetite for data. Much of this data is video; unsurprisingly, video has become a major focus across all Facebook products, be it Facebook Live, Messenger video calling or Instagram. The major difference between the developing and developed world in this respect is the data rates people expect, and their willingness (in many cases, their ability) to pay for them.

- In developed nations, 5G is no longer a pipe dream, and operators are worried about the high costs of rolling out and maintaining such a network (not to mention the billions spent on license fees). To recoup this, there may be an impulse to raise prices, which could play out in several ways: people grudgingly pay; consumers revolt, resulting in margin pressure; or people simply use less data. The last option should worry any player in the video business: what if people elect to skip video to save costs!

- In the developing world, 5G may still be some way off, but in this land of single-digit ARPUs, operators have limited incentive for heavy investment given a marginal (or potentially negative) ROI. On top of that, there is a lack of available talent and a heavy dependence on vendors whose primary revenue comes from selling even more equipment and services, all of which increases the end price to the consumer.

Facebook has its fingers in both these (advanced and developing market) pies. While its customers may be advertisers, its strength comes from the network effect created by its billion-strong user base, and it is essential to give those users the best experience possible.

These users want great experiences, higher engagement and better interaction. Facebook also knows another relevant data point: building, owning and operating a network is hard work, asset-heavy and not especially profitable. It need look no further than Mountain View, where Google has been scaling back its Fiber business. Truck rolls are, frankly, not sexy.

It is not that operators were unaware of this issue, but tackling it traditionally required deep hardware expertise. This changed with the “softwarization” of hardware in the form of network function virtualization. Operators were not software gurus… but Facebook was. It recognized that by riding this paradigm shift and leveraging its core software competencies, it could transform, and potentially disrupt, this market.

Operators could leverage open-source designs to drive down the cost of implementing their networks; vendors would no longer have the upper hand, and the current paradigm of bundling software, hardware and services would be disrupted. Such implementations could result in a better user experience, with Facebook as one of the biggest beneficiaries. Rather than spend billions connecting the world, it would support others in doing so. That way, it would have access to infrastructure it helped architect without owning any of it. In short, it would be the “un-network uncarrier”.

Home Mortgage – ripe for disruption

Aaron LaRue penned an article, “Why Startups can’t disrupt the mortgage industry”, on TechCrunch yesterday, in which he laid out several factors pointing to the following:

- The top 200 loan officers in the country earned, well, way more than the combined revenues of all the startups in this space

- Current attempts to disrupt this have failed to meet expectations due to challenges in integrating with legacy technologies, myriad laws and complex procedures

- A way out may be to acquire one of the smaller lenders and then work on changing its innards to better serve customers.


I may not be a mortgage expert, but I beg to differ. I have seen such arguments in other vertical sectors as well, and one by one, the onslaught of new technologies has brought about significant change; even Wall Street is beginning to notice.

- Sectors that are prone to disruption (and perhaps should be disrupted) are those with:
  - High margins
  - Antiquated technologies
  - Poor customer service
  - Opaque processes

- One could easily argue from reading his post that many of these points do apply. The top 200 officers may outclass any robo-originator in service, but when you realize that the median loan officer income is only $40,000, I would venture that overall customer satisfaction is low (one reason I love looking at numbers: depending on what you look at and how, the picture can be gloomy or rosy).

- While Aaron rightly points out that the startups’ revenues are low, it is also clear that these services are on a strong growth trajectory. In part this is driven by their customer base: a potentially younger, savvier audience more attuned to a “digital approach”. While this audience is small, it is growing, and it will be the norm in the future. As a case in point, consider Wealthfront and Betterment: their portfolios are dwarfed by giants such as Vanguard, but their astonishing growth points to what is coming. No wonder incumbents such as Vanguard have established robo-advisors of their own.


- Another suggestion is to acquire a lender and then begin to innovate. I am not sure that is wise, unless the acquisition comes with a ready stream of customers. An example is Worldpay versus Adyen or Stripe. All three are in the payments business, but the latter two have built efficient businesses from the ground up using the latest of what technology has to offer, while Worldpay, although innovative, has to balance both new and legacy needs. This is hard to do, which is why larger players find it so difficult to compete. The new players have none of this baggage and are therefore far more flexible in responding to changing business needs.

We don’t need another technology patchwork to mend a stodgy business. We do need a fresh look and a willingness to invest and challenge the status quo. When hurdles present themselves, firms will find a way if there is a clear and present need; SoFi’s recently launched hedge fund points to this.

We need more disruption, not less. The mortgage industry as a whole could well deserve it!

AI – rescuing the spectrum crunch (Part 1)

Chamath Palihapitiya, the straight-talking boss of Social Capital, recently sat down with Vanity Fair for an interview in which he described what his firm looks for when investing: “We try to find businesses that are technologically ambitious, that are difficult, that will require tremendous intellectual horsepower, but can basically solve these huge human needs in ways that advance humanity forward”.

Around the same time, and totally unrelated to Chamath and Vanity Fair, DARPA, the much-vaunted US agency credited, among other things, with setting up the precursor to the Internet as we know it, threw down a gauntlet at the International Wireless Communications Expo in Las Vegas. What was it? A grand challenge: ‘The Spectrum Collaboration Challenge’. As the webpage summarizes it, the challenge “is a competition to develop radios with advanced machine-learning capabilities that can collectively develop strategies that optimize use of the wireless spectrum in ways not possible with today’s intrinsically inefficient static allocation approaches”.

Why is this ‘Grand’? Simply because DARPA had accurately pinpointed one of the greatest challenges facing mobile telephony: the lack of available “good” spectrum. In doing so, it also indirectly recognized the indispensable role that communications play in today’s society, and the fact that continuing down the same path may simply not be tenable 10, 20 or 30 years from now, when demand for spectrum and capacity outstrips what we have right now.

Such Grand Challenges are not to be treated lightly; they set the course for ambitious endeavors, tackling hard problems with potentially global ramifications. If you wonder how autonomous cars have evolved so fast, it is due in no small measure to programs such as these, which fund and accelerate development in these areas.

Now you may ask: why is this relevant to me, and why is this such a big deal? The answer emerges from a few basic principles, some of which are governed by the immutable laws of physics.

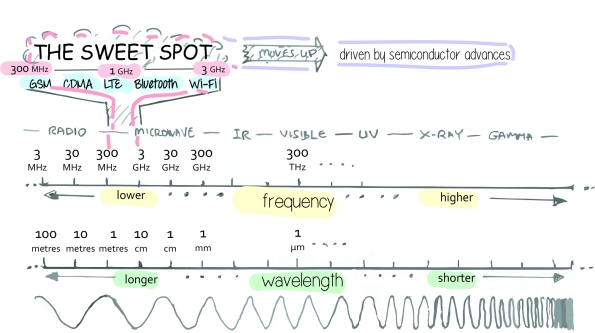

- Limited “good” spectrum: the resource on which all mobile communication depends is finite. While the radio spectrum as a whole is vast, the “good spectrum” (roughly 600 MHz to 3.5 GHz) that all mobile phones use is limited and, well, already occupied. You can transmit above that (5 GHz and above; yes, folks are considering and doing just that for 5G), but higher frequencies cover shorter distances, so you need a lot of base stations close to each other, which increases cost and complexity. Transmit well below that (300 MHz and below) and antennas typically become quite big and unwieldy (remember CB radio antennas?).

Courtesy: wi360.blogspot.com

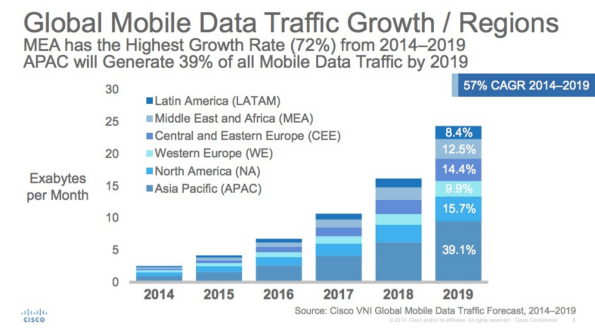

- Increasing demand: if there is one thing everyone, whether regulators, operators or internet players, agrees on, it is that we humans seem to have an insatiable demand for data. Give us better and cheaper devices and cool services such as Netflix at a competitive price point, and we will swallow it all up! If you think humans were bad, there is also projected growth of up to 50 Bn connected devices in the next 10 years, all communicating with each other, with humans and with control points. These devices may not require a lot of bandwidth individually, but together they can chew up a lot of capacity.

Courtesy: Cisco VNI

- And, as a consequence, rising license prices due to scarcity. While the 2008 700 MHz spectrum auction enriched US government coffers by USD 19.0 Bn (yes, billion), the AWS-3 spectrum auction (in the less desirable 1.7/2.1 GHz bands) netted a mind-boggling USD 45.0 Bn.
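The antenna-size point in the first item above follows from wavelength, λ = c / f. The sketch below assumes a simple quarter-wave element as the reference design (real handset antennas are folded or loaded and far more compact), so treat the numbers as orders of magnitude showing how element size scales with frequency, not as actual antenna dimensions.

```python
# Wavelength and quarter-wave antenna length versus carrier frequency.
# Assumption: a simple quarter-wave element is used as the reference design;
# real handset antennas are folded or loaded and far more compact.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength in metres: lambda = c / f."""
    return SPEED_OF_LIGHT_M_PER_S / freq_hz


def quarter_wave_cm(freq_hz: float) -> float:
    """Length of a quarter-wave element in centimetres."""
    return wavelength_m(freq_hz) / 4 * 100


if __name__ == "__main__":
    bands = {
        "CB radio (27 MHz)": 27e6,
        "300 MHz": 300e6,
        "600 MHz": 600e6,
        "3.5 GHz": 3.5e9,
        "5 GHz": 5e9,
    }
    for name, freq in bands.items():
        print(f"{name:>18}: wavelength {wavelength_m(freq):7.3f} m, "
              f"quarter-wave {quarter_wave_cm(freq):6.1f} cm")
```

A quarter-wave element at CB frequencies comes out at roughly 2.8 m, versus about 2 cm at 3.5 GHz, which is why the lower bands mean bulky antennas while the higher ones push toward dense base-station grids.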

One key element that keeps driving up the cost of spectrum is that operators’ business models are built around a setup that has remained pretty much the same since the dawn of the mobile era. It follows a fairly, well, linear approach:

- Secure a spectrum license for a particular period of time (sometimes linked to a particular technology) along with a license to provide specific services

- Build a network to work in this spectrum band

- Offer voice, data and other services to customers, either self-built or via third parties

While this system worked in the early days of voice telephony, it has now started to fray at the edges.

- Regulators want consumers to have access to services at a reasonable price, ensured by a competitive market. However, with spectrum scarcity looming, spectrum prices are surging, and those costs are passed on to customers directly or indirectly.

- If regulators hand out spectrum evenly, it may level the playing field for operators, but it does nothing to address a customer need: the capacity offered by any one operator may not be sufficient, leaving everyone wanting more rather than a few being satisfied.

- Finally, spectrum in many places around the world remains inefficiently used. In many regions, rich firms hoard spectrum as a defensive strategy to depress competition. In other cases, one operator has a lot of unused capacity while another runs beyond peak, delivering a poor customer experience. No wonder previous generations of networks were designed to sustain near-peak loads, increasing the CAPEX/OPEX required to build and run them.
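The inefficiency in the last point can be illustrated with a toy model. The sketch below uses made-up capacity and demand figures (hypothetical, in arbitrary units) for two operators holding equal static spectrum shares, then compares how much demand is served if the same total capacity could be shared dynamically. It is a plain pooling calculation, not the machine-learning approach the DARPA challenge is after, and it ignores interference, coordination overhead and geography.

```python
# Toy comparison of static vs. pooled (dynamic) spectrum capacity.
# All numbers are hypothetical and in arbitrary capacity units (e.g. Mbit/s).

from typing import Dict


def served_static(capacity: Dict[str, float], demand: Dict[str, float]) -> float:
    """Each operator can only serve demand up to its own licensed capacity."""
    return sum(min(capacity[op], demand[op]) for op in demand)


def served_pooled(capacity: Dict[str, float], demand: Dict[str, float]) -> float:
    """Idealized dynamic sharing: any idle capacity can serve any operator's demand."""
    return min(sum(capacity.values()), sum(demand.values()))


if __name__ == "__main__":
    capacity = {"operator_a": 100.0, "operator_b": 100.0}  # equal static shares
    demand = {"operator_a": 40.0, "operator_b": 150.0}     # A mostly idle, B beyond peak
    print("static:", served_static(capacity, demand))  # 40 + 100 = 140
    print("pooled:", served_pooled(capacity, demand))  # min(200, 190) = 190
```

In this made-up scenario, 50 units of demand go unserved purely because capacity is locked to the license holder, even though the system as a whole has room to spare.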

In the next part of this article, we will dive deeper into these issues and try to understand how an AI-enabled dynamic spectrum environment might work; in the final part, we will point out what it could mean for the operator community and internet players at large.

Pebble – mounting troubles in the wearable space

Four days ago, Pebble announced a massive round of layoffs, with ~25% of the firm being handed the pink slip. In a statement, CEO Eric Migicovsky pointed to an increasingly difficult funding environment that limited its options to grow and expand. This is a very different situation from just over a year ago, when Pebble broke not one but two Kickstarter records: raising the fastest million (in 49 minutes) and the highest amount in fund-raising, $13.3 m (a total of $20,336,930 from 78,463 people over two iterations).

As a curious onlooker in the wearable space, keenly aware that apart from my super-early-adopter friends few people (especially outside the US) have heard of Pebble, I have growing doubts about the firm’s viability in its present state, however innovative it may have been. A few reasons point to this, which I will try to illustrate.

- Flop of the Apple Watch: For all its hype, the behemoth in this market, the one everyone hoped would raise the profile of wearables, Apple, has fallen well short of expectations. While Apple proudly announced the sale of 48m iPhones in one quarter, it declined to state the number of watches sold… maybe nothing to crow about. Add to that the fact that Apple itself has dropped the price, and it does not bode well for the product in its present form. While Apple, sitting on billions in cash, can afford such failures, Pebble, with its limited financial power and debt burden, cannot.

- Entry of competitive entrants with an established ecosystem: at the recently concluded MWC in Barcelona, Chinese manufacturers such as Huawei launched several native Android wearables. These actually look quite good and, best of all, already have over 3,000 apps on the Google Play store. Features such as Pebble’s e-ink display and longer battery life may be nice to have, but by now users are accustomed to charging their devices regularly, so adding a watch to the mix isn’t a huge behavior change. While Pebble did announce an entry into India in partnership with Amazon, I fail to see how this can change its fortunes. In India, those who have money can and will buy an Apple Watch; those who don’t… well, they will look at their options, and for the Indian market Pebble isn’t the cheapest smartwatch around. Pebble would have to go below $40 for its base model to even hope to make a difference.

- Lack of an ecosystem: I think this is one of the biggest challenges Pebble faces. Microsoft and BlackBerry faced (and continue to face) the same challenge with their phone platforms. Without a healthy and growing ecosystem, it will always be an uphill battle to attract customers. Apple’s success is testament to this: while Apple perhaps would never match Samsung and the others in pure feature set, what set it apart was good design, an intuitive UI/UX and an expansive ecosystem. Remove one of these elements from the mix and you quickly fall by the wayside. And if you look closely at Pebble’s ecosystem, it is wanting in both quality (ratings) and quantity.

- Limited user benefit: this one brings the point home. As with the Apple Watch, most people haven’t yet found compelling use cases in this space; these devices are “good to have”… not “must haves”. Early adopters already have one; followers can now consider a cheaper Apple Watch or choose from a plethora of Google-powered gear at different price points. What you end up with is a niche of people who either hate Apple and Google or simply want to be different. Is this market big enough to support Pebble, and to do so now? Or is it still in a nascent phase that needs time, which Pebble may not have?

I do feel sorry for Pebble, I really do. Hardware is hard enough; building hardware and a viable ecosystem only compounds the problem. While it is admirable to innovate in spite of these challenges (and we do need brave firms such as these), without a long-term plan (and backing) along with a solid team, I feel that Pebble faces an insurmountable task. My only solace is for their employees, both current and past, those adventurous souls who took the path less traveled. The wearable industry will continue to grow, and the skills and learning they have gained here will make them highly sought after in an increasingly competitive market for talent.

The dynamic pricing game – where all is not 99c

Phil Libin, the effervescent CEO of Evernote, made headlines two days ago when he admitted that Evernote’s pricing strategy had been a bit arbitrary and that a new pricing scheme for its premium offering would launch in 2015. Although little was said about the approach, I hope it points to a flexible pricing model and serves as a forerunner for the pricing strategies of legions of firms down the road.

To put this in context, many software providers (especially app companies) have adopted a one-price-fits-all approach; i.e. if the price for the app is $5 per month in the USA, the price is $5 per month in India. The arguments have typically been these:

- Companies such as Apple do not practice price differentiation around the world, and yet they sell – so we should also be able to do the same

- Adopting a one price fits all approach streamlines our go-to-markets and avoids gaming by users

- It is unfair to users who would end up paying a premium for a product which can be sourced for a cheaper price elsewhere

From first-hand experience, I believe such attitudes have proven to be one of the biggest stumbling blocks for firms seeking global success. A good way to explain why is to pry these assertions apart.

- The brand proposition argument: although every firm would love to believe (and some certainly do, in a misguided manner) that it occupies a premium niche like Apple’s, harsh reality points in a different direction. There are only two brands valued above $100 bn, and Apple is one of them; and no, unless you are Google, you are not the other! Even though your brand may be well known in your Silicon Valley home, its awareness most likely fades with distance as steeply as a mobile signal does; its value in Moldova, for example, may be close to zero. The simple truth is that only a handful of globally renowned brands (e.g. Apple, Samsung, BMW, Mercedes, Louis Vuitton etc.) can carry a large and constant premium around the world.

- The “avoid gaming” argument: this does have some merit, but needs to be considered in the grand scheme of things. Yes, gaming is indeed possible, but it is typically limited to a small cross-section of users who have foreign credit cards, bank accounts and the like. The vast majority are domestic users limited to their local accounts and app stores. The real challenge is that charging the same fee ignores the relative earning power in each country. While Evernote could justify a $5 per month premium in the USA (where the average mobile ARPU is close to $40), it is very hard to justify in South East Asia (mobile ARPU close to $2) or even Eastern Europe (mobile ARPU close to $8). A single price would simply limit the addressable market to a small fraction of its overall potential, and dangerously leave it open to competitors.

| Region | MENA | APAC | Oceania | LatAm | USA | W.EU | E.EU |
|---|---|---|---|---|---|---|---|
| Mobile ARPU (USD) | $12.66 | $2.46 | $11.37 | $9.20 | $48.15 | $23.88 | $8.77 |

- The unfairness argument also doesn’t hold up. The “Big Mac Index” stands testament to the fact that price discrimination is an important element of market positioning.

Even if you argue that you cannot buy a burger in one country and sell it in another, the same holds for online software: take Microsoft’s Office 365, the same product at a different price in different countries.

- That brings me to the final point: $5 per month may sound like a good deal if you are a hard-core user, but compared with Microsoft Office 365, which also retails at $5 per month, it is awfully hard to justify paying the same for what is essentially a very good note-taking tool.
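To make the ARPU comparison concrete, the sketch below expresses a flat $5 monthly price as a share of each region’s mobile ARPU, using the figures from the ARPU table above. Two assumptions are worth flagging: the ARPU values are taken to be monthly USD, and mobile ARPU is used as a rough proxy for willingness to pay, which is the article’s own heuristic. The “ARPU-indexed” price is a hypothetical illustration of one way to scale prices, not Evernote’s actual scheme.

```python
# Flat $5/month price as a share of regional mobile ARPU (figures from the table).
# Assumptions: ARPU values are monthly USD; the ARPU-indexed price is hypothetical.

ARPU_USD = {
    "MENA": 12.66, "APAC": 2.46, "Oceania": 11.37, "LatAm": 9.20,
    "USA": 48.15, "W.EU": 23.88, "E.EU": 8.77,
}
FLAT_PRICE_USD = 5.00


def share_of_arpu(price: float, arpu: float) -> float:
    """Price as a percentage of monthly ARPU."""
    return 100 * price / arpu


def indexed_price(price: float, arpu: float, reference_arpu: float) -> float:
    """Hypothetical: scale the home-market price by relative ARPU."""
    return price * arpu / reference_arpu


if __name__ == "__main__":
    for region, arpu in sorted(ARPU_USD.items(), key=lambda kv: kv[1]):
        print(f"{region:>8}: {share_of_arpu(FLAT_PRICE_USD, arpu):6.1f}% of ARPU, "
              f"ARPU-indexed price ${indexed_price(FLAT_PRICE_USD, arpu, ARPU_USD['USA']):.2f}")
```

In APAC, a flat $5 works out to roughly twice the average monthly mobile bill, versus about 10% in the USA, which is the author’s point about a shrinking addressable market.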

Against this background, I welcome the frank admission that this strategy is in need of an update, and I am also happy to hear that the premium path won’t run through silly advertisements. Phil set a good challenge with his “100-year start-up”, and I am delighted that he is still happy to pivot like one. I certainly hope other “one-trick pony” start-ups learn from this and follow suit.

À la carte versus All you can eat – the rise of the virtual cable operator

Recent FCC announcements proposing to change the interpretation of the term “Multi-channel Video Programming Distributor (MVPD)” to a technology-neutral one have thrown a lifeline to providers such as Aereo, which had been teetering on the brink of bankruptcy. Although it is wide open to debate whether this last-minute reprieve will serve Aereo in the long run, it is a good inflection point at which to examine the cable business as a whole.

“Cord cutting” seems to be the “in thing” these days, with more people moving away from cable and satellite toward an on-demand approach, whether via Apple TV, Roku or, now, Amazon’s devices. This comes from a growing culture of “NOW”: rather than wait for an episode at a predefined hour, people prefer to watch a favorite series at a time, place and now on a device of their choosing. Estimates suggest up to 6.5% of users have gone this route, with a large number of new users not signing up for cable TV in the first place. The only players who seem to have weathered this to date are premium services such as HBO, which have ventured into original content creation, and providers such as ESPN, the hallmark of live sports. Those who want to cut the cord end up dealing with numerous content providers, each offering its own service, billing solution and so on. Putting together all the services a user likes ends up being a tedious and, right now, expensive proposition.

This opens the door for what might be called the Virtual Cable Operator, which would get the blessing of the FCC proposal. Such an aggregator (it could be Aereo) could bundle and offer such channels without investing in the underlying network infrastructure, offering a cost advantage of as much as 20% compared with current offerings. This trend is a familiar one in the telco business, and cable companies had better be ready for it. Right now they may be safe as long as ESPN doesn’t go that route, but with HBO, CBS etc. all announcing their own services, I believe it is only a matter of time. While the primary impacts on cable have been covered extensively, there are a few other consequences, and opportunities, that I would like to address.

The smaller channels (those that charge cable operators less than 30 cents) will see a dramatically reduced audience. Today there is a chance that someone will stop by while channel flipping; with an à la carte service, this chance pretty much disappears, and with it advertising revenue. An easy analogy is an app in an app store: discovery (in this case, channel discovery) becomes highly relevant. Another result is that each channel will be jockeying for space on the “screen”, whether a TV, tablet or phone. I can easily imagine a clean-slate, Google-like design on the TV, where you would be “recommended” programs based on your personal interests. The question is who would control this experience…

Who could this be? A TV vendor (e.g., Samsung, packaging channels with the TV), an OEM (Roku, Amazon, etc.), a telco or cable provider (if you can’t beat them, join ’em), or someone like Aereo? The field is wide open and the jury is still out. One thing, however, is clear: the first company with a winning proposition, combining channels, pricing and an excellent UI, would be a very interesting one to invest in.

In defense of Uber

A few months ago, I penned some thoughts about the ride-sharing battles taking place in continental Europe, whether waged by mytaxi, Lyft or Uber. Now, having finally moved back to the Bay Area, I have been experimenting liberally with all forms of the sharing economy to get a better feel for how things work stateside. After taking some 40-odd Uber and Lyft rides and chatting cheerfully with every driver, whether from Moscow, San Francisco or Mexico, I would like to revisit and re-examine my earlier conclusions.

In San Francisco, the traditional cab business has taken a nosedive: the regular taxi companies have lost close to 65% of their business, amid warnings that their viability is at stake. This figure is not surprising; take a walk and look around, and you will find just as many Ubers and mustachioed Lyfts as regular cabs. With a tech-savvy population primed to use the latest apps, and discount after discount from a deep-pocketed Uber, if anything surprised me it was that the drop wasn’t even more dramatic!

On closer examination, I believe the claim that Uber is destroying taxi drivers’ livelihoods is a fallacy, since in many locations a taxi driver (whose car meets Uber or Lyft requirements) can become an Uber or Lyft driver as well. Objections such as “Uber drivers don’t know the neighborhood” or “they have not passed the Knowledge in London” fall short, since navigation technology has progressed to the point of rendering memorized maps and routes irrelevant. Couple this with user-driven services such as Waze, and I daresay technology trumps the brain.

The two segments that ARE being affected (and are consequently the loudest complainers) are the taxi-dispatch companies and local governments. The first is simply being automated away by a more efficient and transparent service; the second loses revenue from expensive medallions (the permits to drive a cab). If there is a future danger to taxi and Uber drivers, it is that indiscriminately issuing too many Uber “permits” would create more supply than there is economic demand to sustain so many drivers. This may not affect the person who wants to make a few bucks on the side, but it could turn out to be a risky proposition for those leasing new cars just to drive for Uber. Making a quick buck sounds tempting, but driving a cab for long hours is not for the faint-hearted…

I am less concerned about market dominance and monopolization, from both a free-market and a regulatory perspective. In the former case, any attempt at monopoly would see cheaper alternatives emerge (since the technology itself is not unique); in the latter, governments have time and again shown a willingness to step in, in which case Uber would simply become like the regulated “yellow taxis” of old.

But for now, they are here to stay – and all moves to provide efficient, safe and effective transportation to the masses must be applauded and encouraged. GO Uber!

Net Neutrality – let the “Blame Games” begin

The picture stared right back at me. It was hard to miss, with a bright red background and the letters clearly spelled out: “Netflix is slow, because the Verizon Network is congested”. Hardly a few days pass without yet another salvo in the latest war over net neutrality. No sooner does one side claim victory than the other files a counterclaim. This is not only a US phenomenon; it has also spread to Europe, and regulators now have their backs firmly against the wall as they figure out what to do.

My pet peeve is that although there are good, solid arguments on both sides, the majority of comments show a clear lack of understanding of the situation as a whole. I am all for an open and fair market, and an ardent supporter of innovation, but all this needs to be considered in the context of the infrastructure and costs required to support that innovation.

A few facts are certainly in order:

- People are consuming a whole lot more data these days. To give you an idea, here are some average figures for different data services:

  - Downloading an average-length music track: 4 MB

  - 40 hours of general web surfing: 0.3 GB

  - 200 emails: 0.8 GB, depending upon attachments

  - Online radio for 80 hours per month: 5.2 GB

  - Downloading one entire film: 2.1 GB

  - Watching HD films via Netflix: 2.8 GB per hour

- Now consider that more and more content is going the video route, and that this video is increasingly ubiquitous and increasingly available in HD. This means:

  - total data consumption is rising fast, and

  - older legacy networks are no longer capable of, or equipped for, meeting this challenge.
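To see why video dominates, here is a rough back-of-the-envelope sketch that scales each of the per-unit figures above linearly to one month of usage. The usage profile (50 tracks, 40 hours of web surfing, 200 emails, 80 hours of radio, two film downloads and one hour of HD streaming a day) is a hypothetical assumption, as is treating 1 GB as 1,000 MB; only the per-unit figures come from the list.

```python
# Per-unit figures from the list above; 1 GB is assumed to be 1,000 MB here.
MB_PER_TRACK = 4           # one average-length music track
GB_PER_40H_WEB = 0.3       # 40 hours of general web surfing
GB_PER_200_EMAILS = 0.8    # 200 emails, depending upon attachments
GB_PER_80H_RADIO = 5.2     # 80 hours of online radio
GB_PER_FILM_DOWNLOAD = 2.1 # one entire film
GB_PER_HD_HOUR = 2.8       # one hour of HD streaming via Netflix


def monthly_usage_gb(tracks, web_hours, emails, radio_hours, films, hd_hours):
    """Return estimated GB per service, scaling each quoted figure linearly."""
    return {
        "music": tracks * MB_PER_TRACK / 1000,
        "web": web_hours * GB_PER_40H_WEB / 40,
        "email": emails * GB_PER_200_EMAILS / 200,
        "radio": radio_hours * GB_PER_80H_RADIO / 80,
        "film downloads": films * GB_PER_FILM_DOWNLOAD,
        "HD streaming": hd_hours * GB_PER_HD_HOUR,
    }


# Hypothetical monthly profile: one hour of HD streaming a day = 30 hours.
usage = monthly_usage_gb(tracks=50, web_hours=40, emails=200,
                         radio_hours=80, films=2, hd_hours=30)
total = sum(usage.values())

# Print services from largest to smallest, with their share of the total.
for service, gb in sorted(usage.items(), key=lambda kv: -kv[1]):
    print(f"{service:>15}: {gb:6.1f} GB ({gb / total:5.1%})")
print(f"{'total':>15}: {total:6.1f} GB")
```

Under these assumptions, a single hour of HD streaming a day accounts for well over 80% of the month’s volume, more than everything else on the list combined.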

Rather than dive into peering and related topics, for which there are numerous well-written articles elsewhere, I would like to ponder three aspects:

- These networks are very expensive to build out: truck rolls to get fiber to your house are costly;

- once built out, someone has to pay for them; and

- finally, firms are under increasing pressure from shareholders and the like to increase revenues and profits.

This simply means that if the burden fell entirely on the consumer, he would end up with a lighter (I dare say a much lighter) wallet. Pricing would work like a utility: the more you consume, the more you pay. It would be goodbye to the era of unlimited data and bandwidth (even for fixed lines).

Now, I fully accept the argument where there is only one provider (a literal monopoly) and the customer is left with no choice. This is definitely a case that needs some level of regulation to protect the customer from “monopoly extortion”. Even when regulators promote “bit-stream access”, i.e., allowing other parties to use the infrastructure, there is a cost attached. Hence this is more of a pseudo-competition on elements such as branding and customer service than on the infrastructure itself. The competitor may discount, but at the expense of margins, and there is always a price floor agreed with the regulator. Other losers in such a game are consumers who live in far-flung areas: the economics of providing such connections rule them out of any planning (unless the government mandates it). In that case it becomes a cross-subsidy, with the denser urban populace subsidizing the rural community. However, these areas can be served by dedicated high-speed wireless connections and, in my opinion, do not present such a pressing concern.

As everyone agrees, the availability of and access to high-speed data at a reasonable cost has a direct and clear impact on a country’s overall economy. If we do not want to keep raising prices for consumers in order to keep investing in infrastructure upgrades, who then should shoulder this burden? Even though it would undoubtedly be unfair to burden small and emerging companies through throttling and the like, given how skewed data traffic is, with a few providers (e.g., Netflix) consuming the bulk of it, would it be fair for them to bear a portion of this load? After all, these firms are making healthy profits while bearing none of the infrastructure cost.

These aren’t easy questions to answer, but they need to be considered in the broader context. One extreme would be national infrastructure networks, but this is easier said than done. A better compromise may be to get both sides to the negotiating table, involving the consumer as well, with each recognizing that it is better to bury the hatchet and work out a reasonable plan than to pursue endless lawsuits.

Once this is accomplished, the Blame Games will end and, hopefully, the “Innovation” celebrations will begin!

Why Germany Dominates the U.S. in Innovation… or does it?

“Germany dominates the US in Innovation” blared the headline of the HBR blog post by Dan Breznitz, who laid out several points to illustrate where and how Germany outdoes the US. As someone who has lived on both sides of the pond, I beg to differ. The article does cite several valid facts, such as Germany’s strong manufacturing base and good work ethic, but in mixing innovation with inequality and other issues, I think the author has missed the point, and I would like to explain why.

On government-sponsored research, the author extols the benefits of government-funded applied research at institutes such as Fraunhofer. True, these institutes are indeed great and have produced many an invention, perhaps the most widely known being MP3 and its licensing. However, the US government runs multiple efforts in the same direction, the key difference being that it prefers to fund private firms to carry out the research on its behalf. Let us take the example of the Small Business Innovation Research (SBIR) program and compare (all figures taken from the respective websites):

- Number of institutes/firms funded

  - Fraunhofer: 66 institutes and research facilities around Germany

  - SBIR grants: support for 15,000 small firms across the USA

- Number of people (engineers/scientists) employed

  - Fraunhofer: 22,000 staff

  - SBIR grants: 400,000 scientists and researchers

- Funding

  - Fraunhofer: annual budget of around €1.9 billion

  - SBIR: annual budget of $2.5 billion

As can be seen, a single major US program supports roughly 18 times as many people (400,000 versus 22,000) and far more private enterprises, rather than focusing on a few big institutes. It is this private-capital-driven mentality that allowed NASA to downsize and opened the door to many upstarts developing new technologies at a fraction of what they cost the government under NASA (see SpaceX). From my own experience, small and agile firms are able to innovate at an astonishing rate. Government-funded research institutes are valuable, but they are typically trapped in layers of bureaucracy and inefficiency that limit their productivity.

The second argument concerns German leadership in manufacturing. If Americans learned one thing early, it was to franchise, scale and mass-produce, all at the lowest cost. This was the key factor that drove capital to developing markets such as China and India, which became the manufacturing hubs. Recently, as innovations such as automation and 3D printing become more widespread, we can see manufacturing coming back (again, because it provides a cost advantage). Germany is definitely good at manufacturing, but if you talk to the vaunted Mittelstand companies, there is a distinct fear that as China and India catch up, this technological edge is fast evaporating, as the technology is copied (or, sadly, stolen due to poor controls), localized and enhanced to suit local markets. The US took the other route to economic prosperity, focusing on services rather than manufacturing, which has led directly to a huge explosion in that sector and the innovation around it (I consider IT a service rather than a traditional manufacturing industry).

Yes, this does bring about inequality, because you end up with a high-tier service class (who make the cool software, etc.), a lower-tier class who “serves” (the fast-food server, etc.), and no strong “manufacturing” middle class as in Germany. But this has nothing to do with who is the better innovator; it results from the choice to focus on a particular part of the food chain, i.e., services rather than manufacturing. Putting both in the same pot simply muddles the discussion.

It is also true that Audi, BMW and others sell well, but in high tech you can see that their connected-car platforms are built in partnership with the likes of Google and Apple (both US companies). And if you want to talk about cars, the US is one of the few places where challengers such as Tesla have emerged to take on the established giants.

Where Germany trumps the US is in its social benefits: working hard to ensure higher, more egalitarian employment and a fairer distribution of wealth. This stems from a cultural ethos and mindset rather than from innovation, and it is definitely a model worth copying. However, presenting it as an outcome of innovation is far-fetched.

In the end, I believe both countries have their own strengths and weaknesses and would do well to learn from each other. In innovation, if anything, Germany could take a page out of the US playbook.